Django, Docker, and PostgreSQL Tutorial



This tutorial will create a new Django project using Docker and PostgreSQL. Django ships with built-in SQLite support, but even for local development, you are better off using a "real" database like PostgreSQL that matches what is in production.

Although it's possible to run PostgreSQL locally using a tool like Postgres.app, the preferred choice among many developers today is Docker, a tool for creating isolated environments called containers. The easiest way to think of it is as a large virtual environment that contains everything needed for our Django project: dependencies, database, caching services, and any other tools required.

A big reason to use Docker is that it removes most issues around local development setup. Instead of worrying about which software packages are installed or running a local database alongside a project, you run a Docker image of the entire project. Best of all, the same setup can be shared with everyone on a team, which simplifies collaboration.

Install Docker

The first step is to install Docker Desktop for your local machine. It is available for Mac, Windows, and Linux.

The initial download of Docker can take some time because it is a big file. Feel free to stretch your legs at this point!

Once Docker is done installing, confirm which version is installed by running the command docker --version in your terminal.

$ docker --version
Docker version 24.0.5, build ced0996

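Docker Compose is an additional tool that is included with Docker Desktop. On Linux, if you installed Docker Engine on its own rather than Docker Desktop, you may need to add Compose manually; Docker's documentation describes installing it as the docker-compose-plugin package, in which case the command is docker compose (with a space) wherever this tutorial uses docker-compose. Older guides suggest sudo pip install docker-compose, but that installs the legacy Compose V1, which is no longer maintained.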

Hopefully, Docker is installed by this point. To confirm the installation was successful, type docker run hello-world on the command line. You should see a response like this:

$ docker run hello-world
Unable to find image 'hello-world:latest' locally
latest: Pulling from library/hello-world
70f5ac315c5a: Pull complete
Digest: sha256:4f53e2564790c8e7856ec08e384732aa38dc43c52f02952483e3f003afbf23db
Status: Downloaded newer image for hello-world:latest

Hello from Docker!
This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:
 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
    (arm64v8)
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:
 $ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:
 https://hub.docker.com/

For more examples and ideas, visit:
 https://docs.docker.com/get-started/

Docker is now properly installed. We can proceed to configure a local Django setup and switch to Docker and PostgreSQL.

Django Set Up

The code for this project can live anywhere on your computer, but the Desktop is an accessible location for teaching purposes. On the command line, navigate to the desktop, create a new directory called django-docker, and change into it.

# Windows
$ cd onedrive\desktop
$ mkdir django-docker
$ cd django-docker

# macOS
$ cd ~/desktop
$ mkdir django-docker
$ cd django-docker

We will follow the standard steps for creating a new Django project: make a dedicated virtual environment, activate it, and install Django.

# Windows
$ python -m venv .venv
$ Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser
$ .venv\Scripts\Activate.ps1
(.venv) $ python -m pip install django~=5.0.0

# macOS
$ python3 -m venv .venv
$ source .venv/bin/activate
(.venv) $ python3 -m pip install django~=5.0.0

Next, create a new project called django_project, run migrate to initialize the database, and use runserver to start the local server. I usually don't recommend running migrate on new projects until after a custom user model has been configured, but in this tutorial, we will ignore that advice.

(.venv) $ django-admin startproject django_project .
(.venv) $ python manage.py migrate
(.venv) $ python manage.py runserver

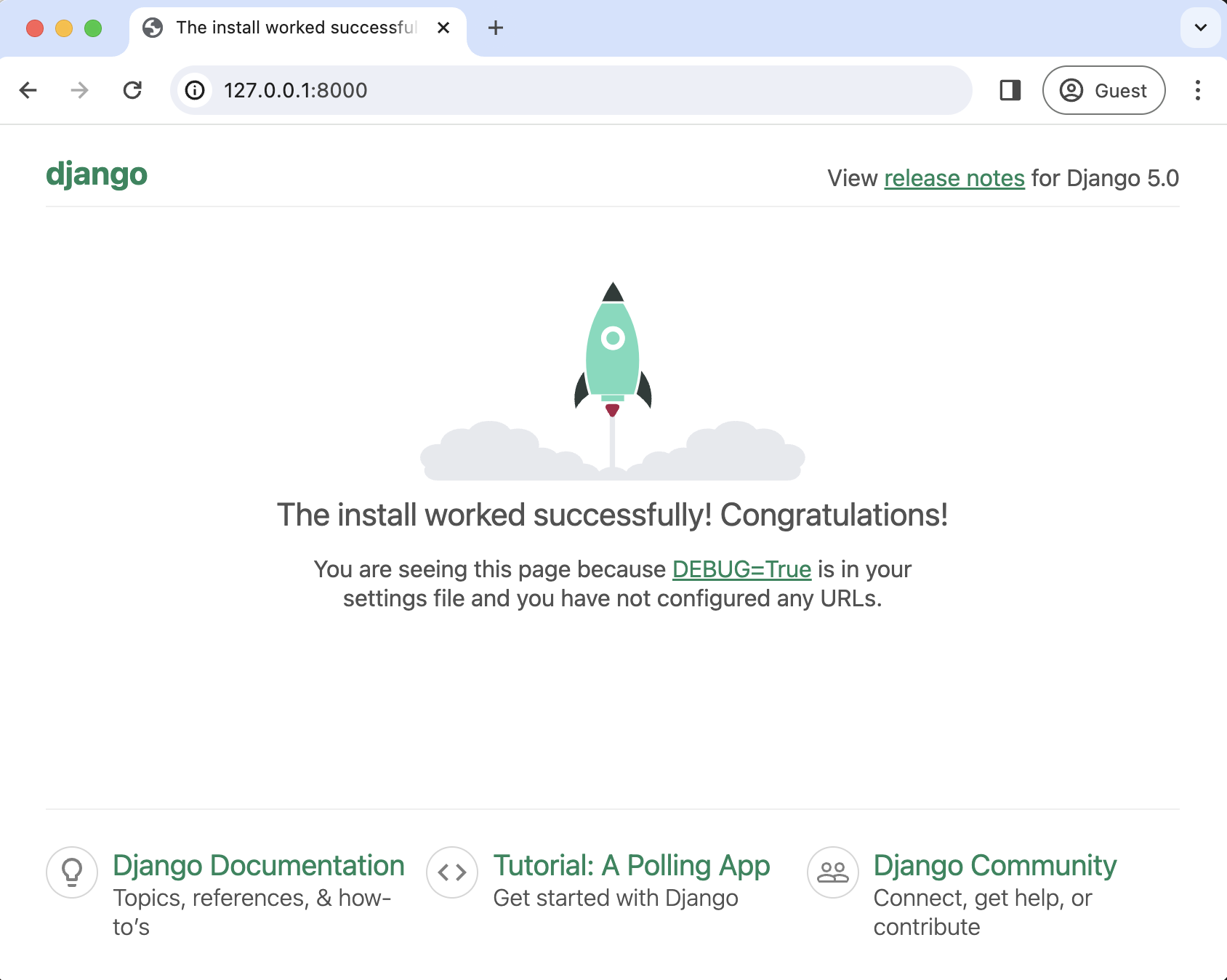

Confirm everything worked by navigating to http://127.0.0.1:8000/ in your web browser. You may need to refresh the page, but you should see the familiar Django welcome page.

The last step before switching over to Docker is creating a requirements.txt file with the contents of our current virtual environment. We can do this with a one-line command.

(.venv) $ pip freeze > requirements.txt

In your text editor, inspect the newly created requirements.txt file.

asgiref==3.7.2
Django==5.0.4
sqlparse==0.4.4

It should contain Django and the packages asgiref and sqlparse, which are automatically included when Django is installed.

Now it's time to switch to Docker. Since we no longer need our virtual environment, exit it by typing deactivate and pressing Return.

(.venv) $ deactivate
$

How do we know the virtual environment is no longer active? The (.venv) prefix disappears from the command line prompt. Any standard Django commands you try to run at this point will fail. For example, try python manage.py runserver to see what happens.

$ python manage.py runserver
  File "/Users/wsv/Desktop/django-docker/manage.py", line 11, in main
    from django.core.management import execute_from_command_line
ModuleNotFoundError: No module named 'django'

This error message means we're entirely out of the virtual environment and ready for Docker.
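If you'd like to check this from Python rather than from the prompt, the standard library gives us enough information. Here is a minimal sketch, assuming an environment created with python -m venv as above; in_virtualenv is a name made up for this example, not part of Django or the venv module.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when this interpreter is running inside a venv.

    A venv's interpreter reports the venv directory as sys.prefix, while
    sys.base_prefix still points at the Python installation it was created from.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
```

Saved to a file and run before and after deactivate, it should report True inside .venv and False outside it.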

Docker Image

A Docker image is a read-only template that describes how to create a Docker container. The image is the instructions, while the container is the actual running instance of an image. As an analogy, an image is the blueprint or set of plans for building an apartment; the container is the actual, fully built apartment.

Images are often based on another image with some additional customization. For example, there is a long list of officially supported images for Python depending on the version and flavor of Python desired.

Dockerfile

We need to create a custom image for our Django project that contains Python but also installs our code and has additional configuration details. To build our image, we make a file known as a Dockerfile that defines the steps to create and run the custom image.

Use your text editor to create a new file named Dockerfile (with no file extension) in the project-level directory next to the manage.py file. Within it, add the following code, which we'll walk through line by line below.

# Pull base image
FROM python:3.11.5-slim-bullseye

# Set environment variables
ENV PIP_DISABLE_PIP_VERSION_CHECK=1
ENV PYTHONDONTWRITEBYTECODE=1
ENV PYTHONUNBUFFERED=1

# Set work directory
WORKDIR /code

# Install dependencies
COPY ./requirements.txt .
RUN pip install -r requirements.txt

# Copy project
COPY . .

Dockerfiles are read from top to bottom when an image is created. The first instruction is a FROM command that tells Docker what base image we want to use for our application. Docker images can be inherited from other images, so instead of creating our own base image, we'll use the official Python image, which has all the tools and packages we need for our Django application. In this case, we're using Python 3.11.5 and the slim variant, which is much smaller because it does not contain the common packages included in the default tag. The tag bullseye refers to Debian 11, the Debian release the image is built on. Setting it explicitly minimizes potential breakage when new versions of Debian are released.
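To make the anatomy of python:3.11.5-slim-bullseye concrete, here is a small sketch that splits it into its parts. It is a simplification for this one naming pattern, and the helper names are hypothetical: it ignores registry hosts with ports and @sha256 digests, and it assumes tags follow the version, variant, Debian release order used by the official Python images.

```python
from __future__ import annotations


def split_image_reference(reference: str) -> tuple[str, str]:
    """Split "name:tag" into (name, tag); a missing tag means "latest".

    Simplified: assumes no registry host with a port and no @digest suffix.
    """
    name, sep, tag = reference.partition(":")
    return name, (tag if sep else "latest")


def describe_python_tag(tag: str) -> dict[str, str]:
    """Split a tag like "3.11.5-slim-bullseye" into version, variant, and release.

    Assumes the official Python image order: version first, Debian release last,
    and any variant (such as "slim") in between.
    """
    parts = tag.split("-")
    return {
        "python": parts[0],
        "variant": "-".join(parts[1:-1]),
        "debian": parts[-1] if len(parts) > 1 else "",
    }


if __name__ == "__main__":
    name, tag = split_image_reference("python:3.11.5-slim-bullseye")
    print(name, describe_python_tag(tag))
```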

Then, we use the ENV command to set three environment variables:

- PIP_DISABLE_PIP_VERSION_CHECK turns off an automatic check for pip updates each time
- PYTHONDONTWRITEBYTECODE means Python will not try to write .pyc files
- PYTHONUNBUFFERED stops Python from buffering console output, so log lines show up in Docker immediately
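We can watch one of these variables take effect without Docker at all. This sketch starts a fresh Python interpreter with and without PYTHONDONTWRITEBYTECODE set and reports the child's sys.dont_write_bytecode flag, which is what stops .pyc files from being written. The helper name is our own; the other two variables are harder to observe from a short script, so they are left out.

```python
import os
import subprocess
import sys


def child_dont_write_bytecode(set_env_var: bool) -> bool:
    """Start a new Python process and return its sys.dont_write_bytecode value."""
    env = dict(os.environ)
    env.pop("PYTHONDONTWRITEBYTECODE", None)  # start from a clean slate
    if set_env_var:
        env["PYTHONDONTWRITEBYTECODE"] = "1"  # same value as in the Dockerfile
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.dont_write_bytecode)"],
        env=env,
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip() == "True"


if __name__ == "__main__":
    print("with variable:", child_dont_write_bytecode(True))
    print("without variable:", child_dont_write_bytecode(False))
```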

The command WORKDIR sets a default working directory for the rest of our instructions. Docker uses it as the default location for all subsequent commands, so we can use relative paths based on the working directory rather than typing out the full file path each time. In our case, the working directory is /code, but it can often be longer, such as /app/src, /usr/src/app, or similar variations depending on the specific needs of a project.

The next step is to install our dependencies with pip and the requirements.txt file we created. The COPY command takes two parameters: the first tells Docker which file(s) to copy into the image, and the second tells Docker where to copy them. In this case, we are copying the existing requirements.txt file from our local computer into the current working directory, which is represented by a period (.).

Once the requirements.txt file is inside the image, we can use the RUN command to execute pip install. This works exactly as if we were running pip install locally on our machine, but this time the modules are installed into the image. The -r flag tells pip to open a file (called requirements.txt here) and install its contents. Without the -r flag, pip would try and fail to install a package named requirements.txt, since that isn't an actual Python package.

At the moment, we have a new image based on the slim-bullseye variant of Python 3.11.5 with our dependencies installed. The final step is to copy all the files in our current directory into the working directory of the image. We can do this with the COPY command. Remember that it takes two parameters, so we'll copy the current directory on our local filesystem (.) into the working directory (.) of the image.

If you're confused right now, don't worry. Docker is a lot to absorb, but the good news is that the steps involved in "Dockerizing" an existing project are very similar from one project to the next.

.dockerignore

A .dockerignore file is the recommended way to specify files and directories that should not be included in a Docker image. It helps reduce overall image size and improves security by keeping things that are meant to be secret out of the image.

We can safely ignore the local virtual environment (.venv), a future .git directory, and a future .gitignore file. In your text editor, create a new file called .dockerignore in the base directory next to the existing manage.py file.

# .dockerignore
.venv
.git
.gitignore
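Conceptually, Docker checks each path in the build context against these patterns and leaves out anything that matches, including everything inside a matched directory. The sketch below approximates that idea for simple patterns like ours; it is not Docker's actual algorithm, which follows Go's filepath.Match rules and also supports ** and ! exceptions. One visible difference: fnmatchcase lets * match across / separators, unlike Docker. The function names are hypothetical.

```python
from __future__ import annotations

from fnmatch import fnmatchcase
from pathlib import PurePosixPath


def read_patterns(text: str) -> list[str]:
    """Return the non-blank, non-comment lines of a .dockerignore file."""
    lines = (line.strip() for line in text.splitlines())
    return [line for line in lines if line and not line.startswith("#")]


def is_ignored(path: str, patterns: list[str]) -> bool:
    """Approximate check: does a pattern match the path or any parent directory?

    Matching parents is what makes ".venv" exclude everything under .venv/.
    """
    parts = PurePosixPath(path).parts
    candidates = ["/".join(parts[: i + 1]) for i in range(len(parts))]
    return any(fnmatchcase(c, p) for c in candidates for p in patterns)


if __name__ == "__main__":
    patterns = read_patterns("# .dockerignore\n.venv\n.git\n.gitignore\n")
    for path in [".venv/bin/python", "manage.py", "django_project/settings.py"]:
        print(path, "ignored" if is_ignored(path, patterns) else "kept")
```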

We now have complete instructions for creating a custom image, but we haven't built it yet. The command to do this is docker build followed by a period, ., which sets the current directory as the build context; by default, Docker looks for a file named Dockerfile there. There will be a lot of output here. I've only included the first two lines and the last one.

$ docker build .
[+] Building 9.1s (10/10) FINISHED
 => [internal] load build definition from Dockerfile
...
 => => writing image sha256:89ede1...

docker-compose.yml

Our fully built custom image is now available to run as a container. To run the container, we need a list of instructions in a file called docker-compose.yml. Create a docker-compose.yml file with your text editor in the project-level directory next to the Dockerfile. It will contain the following code.

# docker-compose.yml
services:
  web:
    build: .
    ports:
      - "8000:8000"
    command: python manage.py runserver 0.0.0.0:8000
    volumes:
      - .:/code

Docker has moved from Compose V1 to Compose V2, which no longer requires the top-level version key that older tutorials include. We start by specifying which services (or containers) we want running within our Docker host. It's possible to have multiple services running, but for now we have just one, web.

Within web, we set build to look in the current directory for our Dockerfile. The ports entry maps port 8000, Django's default, on our computer to port 8000 in the container, and command runs the local web server. The server binds to 0.0.0.0 rather than 127.0.0.1 so that it accepts connections coming from outside the container. Finally, the volumes entry mounts the current directory into the container at /code, keeping the container's filesystem in sync with our local computer's filesystem: a change made on either side is immediately visible on the other.
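The "8000:8000" string under ports follows a HOST:CONTAINER order. A minimal sketch of how that short syntax splits apart; parse_port_mapping is hypothetical and simplified: it ignores the optional host IP prefix, port ranges, and protocol suffixes such as /udp, and it rejects a lone container port even though Compose accepts one.

```python
from __future__ import annotations


def parse_port_mapping(mapping: str) -> tuple[int, int]:
    """Split a Compose short-syntax "HOST:CONTAINER" string into two port numbers.

    Simplified: no host IP prefix, port ranges, or "/udp"-style protocol suffix.
    """
    host, sep, container = mapping.partition(":")
    if not sep:
        raise ValueError(f"expected HOST:CONTAINER, got {mapping!r}")
    return int(host), int(container)


if __name__ == "__main__":
    host_port, container_port = parse_port_mapping("8000:8000")
    print(f"http://127.0.0.1:{host_port}/ -> container port {container_port}")
```

With a mapping like "8080:8000", for example, the same container would be reachable at http://127.0.0.1:8080/ on your computer.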

The final step is running our Docker container using the command docker-compose up, resulting in another long stream of output code on the command line.

$ docker-compose up
[+] Building 4.2s (10/10) FINISHED
 => [internal] load build definition from Dockerfile
...
Attaching to docker-web-1
django-docker-example-web-1 | Watching for file changes with StatReloader
django-docker-example-web-1 | Performing system checks...
django-docker-example-web-1 |
django-docker-example-web-1 |
django-docker-example-web-1 | System check identified no issues (0 silenced).
django-docker-example-web-1 | October 01, 2023 - 11:24:05
django-docker-example-web-1 | Django version 5.0.4, using settings 'django_project.settings'
django-docker-example-web-1 | Starting development server at http://0.0.0.0:8000/
django-docker-example-web-1 | Quit the server with CONTROL-C.
django-docker-example-web-1 |
django-docker-example-web-1 |

To confirm it worked, return to http://127.0.0.1:8000/ in your web browser and refresh the page; the Django welcome page should still appear.
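If you'd rather check from a script than a browser, for example as part of a setup script, a few lines of standard-library Python will do. This is a sketch: is_up is a hypothetical helper, and it treats any HTTP status below 500 as a sign that the server is running.

```python
import urllib.error
import urllib.request


def is_up(url: str = "http://127.0.0.1:8000/", timeout: float = 2.0) -> bool:
    """Return True if the URL answers with an HTTP status below 500."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status < 500
    except urllib.error.HTTPError as exc:  # a 4xx/5xx reply still means a server answered
        return exc.code < 500
    except OSError:  # connection refused, timed out, and other URLError cases
        return False


if __name__ == "__main__":
    print("Django dev server up:", is_up())
```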

Django is now running purely within a Docker container. We are not working within a local virtual environment, and we did not run the runserver command ourselves; Docker Compose ran it for us. Our code and Django server now run within a self-contained Docker container. Success!

With practice, the flow will start to make more sense:

- create a Dockerfile with custom image instructions
- add a .dockerignore file
- build the image
- create a docker-compose.yml file
- spin up the container(s)

Stop the currently running container with Control+c (press the "Control" and "c" keys at the same time) and then type docker-compose down. Docker containers can use a lot of memory, so it's a good idea to stop them when you're done using them. Containers are meant to be stateless, which is why we use a volume to mount our local code into the container: the code lives and is saved on our computer, not inside the container.

$ docker-compose down
[+] Running 2/2
 ⠿ Container docker-web-1 Removed
 ⠿ Network docker_default
$

Whenever any new technology is introduced, there are potential security concerns. In Docker's case, one example is that containers run as the root user by default. The root user (also known as the "superuser" or "admin") is a special user account used in Linux for system administration. It is the most privileged user on a Linux system and can access all commands and files. The Docker docs contain an extensive section on security and specifically on rootless mode. We will not cover it here since this tutorial focuses on Django, not Docker, but if your website stores sensitive information, review the entire security section closely before going live.
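A quick way to see this for yourself is to ask Python which user it runs as. The sketch below (running_as_root is a hypothetical helper) reports whether the effective user ID is 0, which is the root user; os.geteuid only exists on POSIX systems, so the function returns None elsewhere, such as on a Windows host.

```python
from __future__ import annotations

import os


def running_as_root() -> bool | None:
    """Return True if the effective user is root (UID 0), or None if unknown.

    os.geteuid() exists only on POSIX systems such as the Linux containers
    used here; on other platforms there is nothing to check.
    """
    geteuid = getattr(os, "geteuid", None)
    if geteuid is None:
        return None
    return geteuid() == 0


if __name__ == "__main__":
    print("running as root:", running_as_root())
```

Because our Dockerfile has no USER instruction, a one-liner such as docker-compose exec web python -c "import os; print(os.geteuid())" should print 0 while the container is running.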

psycopg

It's important to pause right now and think about what it means to install a package into Docker instead of a local virtual environment. In a traditional project, we'd run the command python -m pip install "psycopg[binary]" from the command line to install Psycopg, the PostgreSQL database adapter for Python. But we're working with Docker now.

There are two options. The first is to install "psycopg[binary]" locally and then pip freeze our virtual environment to update requirements.txt. If we were going to use the local environment, this might make sense. But since we are committed to Docker, we can take the second option: skip that step and add "psycopg[binary]" to requirements.txt directly. We don't need to update the actual virtual environment because it is unlikely we will use it again, and if we ever do, we can update it from requirements.txt anyway.

Open the existing requirements.txt file in your text editor and add psycopg[binary], pinned to a specific version, to the bottom.

asgiref==3.7.2
Django==5.0.4
sqlparse==0.4.4
psycopg[binary]==3.1.12
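Each line above is a package name, an optional set of extras in square brackets, and an exact == pin. The sketch below parses only that narrow form, as an illustration of what pip reads from the file; parse_pins is hypothetical and rejects anything else. For real-world parsing, the third-party packaging library's packaging.requirements.Requirement class handles the full requirement syntax.

```python
from __future__ import annotations

import re

# A name, optional [extras], then an exact "==" pin; nothing else is accepted.
PIN = re.compile(
    r"^(?P<name>[A-Za-z0-9][A-Za-z0-9._-]*)"
    r"(?:\[(?P<extras>[^\]]*)\])?"
    r"==(?P<version>\S+)$"
)


def parse_pins(text: str) -> dict[str, tuple[set[str], str]]:
    """Map lowercased package names to (extras, pinned version)."""
    pins: dict[str, tuple[set[str], str]] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue
        match = PIN.match(line)
        if match is None:
            raise ValueError(f"unsupported requirement line: {line!r}")
        extras = {e.strip() for e in (match["extras"] or "").split(",") if e.strip()}
        pins[match["name"].lower()] = (extras, match["version"])
    return pins


if __name__ == "__main__":
    with open("requirements.txt") as f:
        for name, (extras, version) in parse_pins(f.read()).items():
            print(name, sorted(extras), version)
```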

We will build the new image and spin up our containers at the end of our PostgreSQL configuration changes. But not yet.

PostgreSQL

In the existing docker-compose.yml file, add a new service called db, which means two separate containers will be running within our Docker host: web for the Django local server and db for our PostgreSQL database.

The web service depends on the db service to run, so we'll add a line called depends_on to web signifying this.

Within the db service, we specify which version of PostgreSQL to use. As of this writing, Heroku supports version 14 as the latest release, so that is what we will use. Data written inside a container is lost when the container is removed, which is an obvious problem for our database! The solution is to create a named volume called postgres_data and mount it at /var/lib/postgresql/data/, the directory where PostgreSQL stores its data inside the container. The final step is to set POSTGRES_HOST_AUTH_METHOD=trust in the db service's environment, which lets clients connect without a password. It is recommended to be more explicit with permissions for large databases with many database users, but this setting is a good choice for local development when there is just one developer.

Here is what the updated file looks like:

# docker-compose.yml
services:
  web:
    build: .
    command: python /code/manage.py runserver 0.0.0.0:8000
    volumes:
      - .:/code
    ports:
      - 8000:8000
    depends_on:
      - db
  db:
    image: postgres:14
    volumes:
      - postgres_data:/var/lib/postgresql/data/
    environment:
      - "POSTGRES_HOST_AUTH_METHOD=trust"

volumes:
  postgres_data:
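One caveat about depends_on in this short form: it controls start order only. Compose starts db before web, but it doesn't wait for PostgreSQL to accept connections, so a command that needs the database right away can fail if it runs too early. Compose's long depends_on syntax, combined with a healthcheck on db and condition: service_healthy, is one way to handle this; another is a small polling loop like the sketch below, which retries a TCP connection until it succeeds or times out. wait_for_port is hypothetical, and an open port is a good but not perfect signal that the database is ready for queries.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable, or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # "db" is the Compose service name, so this only resolves inside the web container.
    print("db ready:", wait_for_port("db", 5432))
```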

DATABASES

The third and final step is to update the django_project/settings.py file to use PostgreSQL and not SQLite. Within your text editor, scroll down to the DATABASES config.

By default, Django specifies sqlite3 as the database engine, gives it the name db.sqlite3, and places it at BASE_DIR in our project-level directory.

# django_project/settings.py
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.sqlite3",
        "NAME": BASE_DIR / "db.sqlite3",
    }
}

To switch over to PostgreSQL, we will update the ENGINE configuration. PostgreSQL requires a NAME, USER, PASSWORD, HOST, and PORT. For convenience, we'll set the first three to postgres, the HOST to db, which is the name of our service set in docker-compose.yml, and the PORT to 5432, the default PostgreSQL port.

# django_project/settings.py
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": "postgres",
        "USER": "postgres",
        "PASSWORD": "postgres",
        "HOST": "db",  # set in docker-compose.yml
        "PORT": 5432,  # default postgres port
    }
}
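Hard-coding these values is fine for local development, but many projects read them from environment variables instead so that each environment can supply its own. Here is a sketch of that approach with variable names of our choosing (POSTGRES_HOST and POSTGRES_PORT in particular are hypothetical; nothing in this tutorial sets them). With none of them set, it falls back to exactly the values above.

```python
from __future__ import annotations

import os
from collections.abc import Mapping


def database_settings(env: Mapping[str, str] | None = None) -> dict:
    """Build Django's DATABASES setting from environment variables.

    Falls back to this tutorial's hard-coded values when a variable is unset.
    """
    env = os.environ if env is None else env
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env.get("POSTGRES_DB", "postgres"),
            "USER": env.get("POSTGRES_USER", "postgres"),
            "PASSWORD": env.get("POSTGRES_PASSWORD", "postgres"),
            "HOST": env.get("POSTGRES_HOST", "db"),  # Compose service name by default
            "PORT": int(env.get("POSTGRES_PORT", "5432")),
        }
    }
```

In settings.py, you would then define the function and write DATABASES = database_settings().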

And that's it! We can build our new image containing psycopg[binary] and spin up the two containers in detached mode with the following single command:

$ docker-compose up -d --build

If you refresh the Django welcome page at http://127.0.0.1:8000/ it should work, which means Django has successfully connected to PostgreSQL via Docker.

Running commands within Docker differs slightly from a traditional Django project. For example, to migrate the new PostgreSQL database running in Docker execute the following command:

$ docker-compose exec web python manage.py migrate

If you want to run createsuperuser, you'd also prefix it with docker-compose exec web:

$ docker-compose exec web python manage.py createsuperuser

And so on. When you're done, remember to shut down your Docker containers since they can consume a lot of computer memory.

$ docker-compose down
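Because every command gets the same docker-compose exec web prefix, some developers wrap it in a small script. Here is a sketch of one such wrapper; manage_py and run_manage_py are hypothetical names, and it assumes the service is called web, as in our docker-compose.yml.

```python
from __future__ import annotations

import shlex
import subprocess


def manage_py(*args: str, service: str = "web") -> list[str]:
    """Build the argv for running a manage.py command inside a Compose service."""
    return ["docker-compose", "exec", service, "python", "manage.py", *args]


def run_manage_py(*args: str, service: str = "web") -> None:
    """Run the command, raising CalledProcessError if it exits with an error."""
    subprocess.run(manage_py(*args, service=service), check=True)


if __name__ == "__main__":
    print(shlex.join(manage_py("migrate")))
    # prints: docker-compose exec web python manage.py migrate
```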

Quick Review

Here is a short version of the terms and concepts we've covered in this post:

- Image: the "definition" of your project

- Container: what your project runs in (an instance of the image)

- Dockerfile: defines what your image looks like

- docker-compose.yml: a YAML file that takes the Dockerfile and adds additional instructions for how our Docker containers should run

We use the Dockerfile to tell Docker how to build our image. Then, we run our actual project within a container. The docker-compose.yml file provides additional information about how our Docker containers should run, such as which ports to publish, which volumes to mount, and which services depend on each other.

Next Steps

If you'd like to learn more about using Django, Docker, and PostgreSQL, I've written an entire book on the subject, Django for Professionals. The first several chapters are free to read online.